#how to define the shape of numpy array

Explore tagged Tumblr posts

Text

The Best Open-Source Tools for Data Science in 2025

Data science in 2025 is thriving, driven by a robust ecosystem of open-source tools that empower professionals to extract insights, build predictive models, and deploy data-driven solutions at scale. This year, the landscape is more dynamic than ever, with established favorites and emerging contenders shaping how data scientists work. Here’s an in-depth look at the best open-source tools that are defining data science in 2025.

1. Python: The Universal Language of Data Science

Python remains the cornerstone of data science. Its intuitive syntax, extensive libraries, and active community make it the go-to language for everything from data wrangling to deep learning. Libraries such as NumPy and Pandas streamline numerical computations and data manipulation, while scikit-learn is the gold standard for classical machine learning tasks.

NumPy: Efficient array operations and mathematical functions.

Pandas: Powerful data structures (DataFrames) for cleaning, transforming, and analyzing structured data.

scikit-learn: Comprehensive suite for classification, regression, clustering, and model evaluation.

Python’s popularity is reflected in the 2025 Stack Overflow Developer Survey, with 53% of developers using it for data projects.

2. R and RStudio: Statistical Powerhouses

R continues to shine in academia and industries where statistical rigor is paramount. The RStudio IDE enhances productivity with features for scripting, debugging, and visualization. R’s package ecosystem—especially tidyverse for data manipulation and ggplot2 for visualization—remains unmatched for statistical analysis and custom plotting.

Shiny: Build interactive web applications directly from R.

CRAN: Over 18,000 packages for every conceivable statistical need.

R is favored by 36% of users, especially for advanced analytics and research.

3. Jupyter Notebooks and JupyterLab: Interactive Exploration

Jupyter Notebooks are indispensable for prototyping, sharing, and documenting data science workflows. They support live code (Python, R, Julia, and more), visualizations, and narrative text in a single document. JupyterLab, the next-generation interface, offers enhanced collaboration and modularity.

Over 15 million notebooks hosted as of 2025, with 80% of data analysts using them regularly.

4. Apache Spark: Big Data at Lightning Speed

As data volumes grow, Apache Spark stands out for its ability to process massive datasets rapidly, both in batch and real-time. Spark’s distributed architecture, support for SQL, machine learning (MLlib), and compatibility with Python, R, Scala, and Java make it a staple for big data analytics.

65% increase in Spark adoption since 2023, reflecting its scalability and performance.

5. TensorFlow and PyTorch: Deep Learning Titans

For machine learning and AI, TensorFlow and PyTorch dominate. Both offer flexible APIs for building and training neural networks, with strong community support and integration with cloud platforms.

TensorFlow: Preferred for production-grade models and scalability; used by over 33% of ML professionals.

PyTorch: Valued for its dynamic computation graph and ease of experimentation, especially in research settings.

6. Data Visualization: Plotly, D3.js, and Apache Superset

Effective data storytelling relies on compelling visualizations:

Plotly: Python-based, supports interactive and publication-quality charts; easy for both static and dynamic visualizations.

D3.js: JavaScript library for highly customizable, web-based visualizations; ideal for specialists seeking full control.

Apache Superset: Open-source dashboarding platform for interactive, scalable visual analytics; increasingly adopted for enterprise BI.

Tableau Public, though not fully open-source, is also popular for sharing interactive visualizations with a broad audience.

7. Pandas: The Data Wrangling Workhorse

Pandas remains the backbone of data manipulation in Python, powering up to 90% of data wrangling tasks. Its DataFrame structure simplifies complex operations, making it essential for cleaning, transforming, and analyzing large datasets.

8. Scikit-learn: Machine Learning Made Simple

scikit-learn is the default choice for classical machine learning. Its consistent API, extensive documentation, and wide range of algorithms make it ideal for tasks such as classification, regression, clustering, and model validation.

9. Apache Airflow: Workflow Orchestration

As data pipelines become more complex, Apache Airflow has emerged as the go-to tool for workflow automation and orchestration. Its user-friendly interface and scalability have driven a 35% surge in adoption among data engineers in the past year.

10. MLflow: Model Management and Experiment Tracking

MLflow streamlines the machine learning lifecycle, offering tools for experiment tracking, model packaging, and deployment. Over 60% of ML engineers use MLflow for its integration capabilities and ease of use in production environments.

11. Docker and Kubernetes: Reproducibility and Scalability

Containerization with Docker and orchestration via Kubernetes ensure that data science applications run consistently across environments. These tools are now standard for deploying models and scaling data-driven services in production.

12. Emerging Contenders: Streamlit and More

Streamlit: Rapidly build and deploy interactive data apps with minimal code, gaining popularity for internal dashboards and quick prototypes.

Redash: SQL-based visualization and dashboarding tool, ideal for teams needing quick insights from databases.

Kibana: Real-time data exploration and monitoring, especially for log analytics and anomaly detection.

Conclusion: The Open-Source Advantage in 2025

Open-source tools continue to drive innovation in data science, making advanced analytics accessible, scalable, and collaborative. Mastery of these tools is not just a technical advantage—it’s essential for staying competitive in a rapidly evolving field. Whether you’re a beginner or a seasoned professional, leveraging this ecosystem will unlock new possibilities and accelerate your journey from raw data to actionable insight.

The future of data science is open, and in 2025, these tools are your ticket to building smarter, faster, and more impactful solutions.

#python#r#rstudio#jupyternotebook#jupyterlab#apachespark#tensorflow#pytorch#plotly#d3js#apachesuperset#pandas#scikitlearn#apacheairflow#mlflow#docker#kubernetes#streamlit#redash#kibana#nschool academy#datascience

0 notes

Text

StableHLO & OpenXLA: Enhancing Hardware Portability for ML

JAX and OpenXLA: Methods and Theory

JAX, a Python numerical computing package with pytorch/XLA compilation and automated differentiation, optimises computations for CPUs, GPUs, and TPUs using OpenXLA.

Even though the Intel articles on JAX and OpenXLA do not define StableHLO, the context of OpenXLA's function suggests that it is related to the portability and stability of the Hardware Abstraction Layer (HAL) in the ecosystem. Intel Extension for OpenXLA with PJRT plug-in.

StableHLO likely matches the sources' scenario:

OpenXLA abstracts low-level hardware backends from high-level machine learning frameworks like JAX. This abstraction lets models operate on different hardware without code changes.

OpenXLA uses an intermediate representation (IR) to connect the backend (XLA compilers for specific hardware) and frontend (JAX).

This abstraction requires IR stability to perform properly and enable reliable deployment across devices. This IR change may break backend compilers and frontend frameworks.

We believe StableHLO is an OpenXLA versioned and standardised HLO (High-Level Optimiser) IR. With this standardisation and versioning, models compiled for a StableHLO version would work on compatible hardware backends that support that version.

Although the sources don't define StableHLO, OpenXLA's role as an abstraction layer with an intermediate representation implies that it's essential to the JAX and OpenXLA ecosystem for ensuring computation stability and portability across hardware targets. Hardware and software (JAX via OpenXLA) would have a solid contract.

To better understand StableHLO, you should read OpenXLA project and component documentation.

Understanding how JAX and OpenXLA interact, especially the compilation and execution cycle, helps Intel and other systems optimise performance. OpenXLA's role in backend-agnostic optimisation, JAX's staged compilation, and cross-device execution are highlighted.

Important topics

Core Functionality and Transformation System of JAX

JIT compilation, vectorisation, parallelisation, and automated differentiation (jax.grad) are added to NumPy by JAX.

These changes make JAX functions more efficient.

Jax.jit converts JAX functions into XLA computations, improving efficiency. “The jax.jit transformation in JAX optimises numerical computations by compiling Python functions that operate on JAX arrays into efficient, hardware-accelerated code using XLA.”

OpenXLA as a Backend-Agnostic Compiler

OpenXLA bridges hardware backends to JAX. The optimisation and intermediate representation pipeline is combined.

The jax.jit converter converts JAX code to OpenXLA HLO IR.

OpenXLA optimises this HLO IR and generates backend machine code.

“OpenXLA serves as a unifying compiler infrastructure that produces optimised machine code for CPUs, GPUs, and TPUs from JAX's computation graph in HLO.”

Compilation in stages in JAX

JAX-decorated functions employ staged compilation.Invoking jit requires a specified input shape and data type (abstract signature).

JAX watches the Python function's execution using abstract variables to describe the calculation.

This traced calculation then reaches the OpenXLA HLO IR.

OpenXLA optimises the HLO and generates target backend code.

Using the resulting code in subsequent calls with the same abstract signature will boost performance. “When a JAX-jitted function is called for the first time with a specific shape and dtype of inputs, JAX traces the sequence of operations, and OpenXLA compiles this computation graph into optimised machine code for the target device.”

CPU and GPU Execution Flow

How OpenXLA lets JAX regulate device computations.

OpenXLA optimises CPU machine code using SIMD and other architectural features.

In OpenXLA, data flows and kernel execution are maintained while the GPU handles calculations.

On GPUs, OpenXLA generates kernels for the GPU's parallel processing units.

This includes initiating and coordinating GPU kernels and managing CPU-GPU memory transfers.

Data management between devices using device buffers (jax.device_buffer.DeviceArray).

Understanding Abstract Signatures and Recompilation

The form and data type of input arguments determine a jax.jit-decorated function's abstract signature.

When a jitted function is called with inputs with a different abstract signature, JAX recompiles. Use consistent input shapes and data types to save compilation costs.

Intel Hardware/Software Optimisation Integration

Since the resources are on the Intel developer website, they likely demonstrate how JAX and OpenXLA may optimise Intel CPUs and GPUs.

This area includes optimised kernels, vectorisation on Intel architectures like AVX-512, and interaction with Intel-specific libraries or tools.

The jax.jit transformation in JAX employs XLA to turn Python functions that operate with JAX arrays into hardware-accelerated code, optimising numerical operations.

OpenXLA serves as a unified compiler infrastructure, converting JAX's compute graph (HLO) into optimised machine code for CPUs, GPUs, and TPUs.

When JAX-jitted functions are initially performed with a specific shape and dtype of inputs, JAX tracks the chain of operations. OpenXLA then compiles this processing graph into device-optimized machine code.

OpenXLA targets GPUs to generate kernels for the GPU's parallel processing units. Launching and synchronising GPU kernels and managing CPU-GPU data flows are required.

#technology#technews#govindhtech#news#technologynews#AI#artificial intelligence#StableHLO#OpenXLA#HLO#JAX#OpenXLA HLO#JAX and OpenXLA

0 notes

Text

Python: The Versatile Programming Language You Need to Learn

Introduction

Python has become one of the most popular programming languages in the world, and for good reason. Its simplicity, versatility, and powerful capabilities make it an ideal choice for both beginners and experienced developers. From web development to data science and artificial intelligence, Python is everywhere, shaping the future of technology. At TechnoComet Solutions, we leverage Python’s power to build innovative solutions for our clients. With a robust ecosystem of libraries and frameworks, Python continues to drive advancements across industries. As technology evolves, Python’s role in emerging fields like AI, machine learning, and automation becomes even more critical.

A Brief History of Python: From Simplicity to Power

Python was created in the late 1980s by Guido van Rossum and was first released in 1991. Its design philosophy emphasizes code readability and simplicity, making it accessible for beginners while powerful enough for experts. Over the years, Python has evolved significantly, gaining a vast array of libraries and frameworks that extend its capabilities into various domains, including web development, data science, artificial intelligence (AI), and more. This growth has solidified Python’s position as one of the most popular programming languages in the world.

The language’s ongoing development is driven by a community of dedicated contributors who continually enhance its features. As a result, Python remains relevant in an ever-changing technological landscape, adapting to new challenges and opportunities.

The Popularity Surge: Why Python is Everywhere

The surge in Python’s popularity can be attributed to several factors. First, its clean and readable syntax allows developers to express concepts in fewer lines of code compared to other languages. This simplicity not only makes it easier for newcomers to learn but also enhances productivity for experienced developers. Second, Python’s extensive ecosystem of libraries—such as NumPy for numerical computations and Pandas for data manipulation—enables rapid development across various fields.

Additionally, the rise of data science and AI has further propelled Python into the spotlight, as it is often the language of choice for machine learning applications. The growing number of online courses and tutorials has also made learning Python more accessible than ever before. This accessibility has contributed to a vibrant community that shares knowledge and resources.

Understanding Python’s Core Features

Clean and Readable Syntax: Making Programming Accessible for Beginners

One of Python’s standout features is its clean syntax, which resembles natural language. This design choice reduces the cognitive load on programmers, allowing them to focus on solving problems rather than deciphering complex syntax. The use of indentation to define code blocks instead of braces or keywords also contributes to its readability. This clarity helps beginners grasp programming concepts quickly without feeling overwhelmed. As they progress, they can appreciate how this simplicity leads to more maintainable code in larger projects. Ultimately, this focus on readability fosters a culture of collaboration among developers.

Dynamically Typed: What It Means for Flexibility in Development

Python is dynamically typed, meaning that variable types are determined at runtime rather than in advance. This flexibility allows developers to write code more quickly and adaptively, as they do not need to declare variable types explicitly. However, this feature can also lead to runtime errors if not managed carefully. Developers must be vigilant about ensuring their code behaves as expected since type-related errors may not surface until execution time. Despite this potential pitfall, many find that the benefits of dynamic typing outweigh the drawbacks. This adaptability encourages experimentation and innovation in coding practices.

Applications of Python: From Web Development to AI

Python in Web Development: Frameworks, Libraries, and Tools

Python’s versatility shines in web development through frameworks like Django and Flask. Django is a high-level framework that encourages rapid development and clean design by providing built-in features such as authentication and database management. Flask, on the other hand, is a micro-framework that offers flexibility for smaller applications or services where developers want more control over components. Both frameworks have strong communities that contribute plugins and extensions to enhance functionality. With these tools at their disposal, developers can create robust web applications efficiently while focusing on delivering value to users. This capability allows businesses to launch products faster and respond quickly to market demands.

Unlocking the Power of Python in Data Science and Machine Learning

In data science and machine learning, Python is unmatched due to its rich ecosystem of libraries. Tools like Pandas facilitate data manipulation and analysis, while Scikit-learn provides simple yet effective tools for predictive data analysis. For deep learning applications, libraries like TensorFlow and Keras enable developers to create complex neural networks with ease. The integration of these libraries allows data scientists to streamline workflows from data collection to model deployment seamlessly. As a result, professionals can uncover insights faster than ever before while maintaining high accuracy levels in their predictions.

Why Python is Ideal for Beginners

Easy to Learn, Easy to Master: The Learning Curve of Python

Python’s straightforward syntax makes it an excellent choice for beginners. New programmers can start writing simple scripts within minutes, while more advanced concepts can be mastered over time. This gradual learning curve encourages continuous engagement with programming; learners can build confidence as they tackle progressively challenging projects. Many educational institutions have adopted Python as their primary teaching language due to its accessibility. This trend further reinforces its status as a go-to language for aspiring programmers who want a solid foundation in coding principles.

A Friendly Ecosystem: Python’s Extensive Documentation and Support

The Python community is vast and welcoming, providing extensive documentation and support through forums like Stack Overflow and GitHub. This community-driven approach means that beginners can easily find resources and assistance as they learn. Additionally, numerous online courses cater specifically to newcomers looking to build their skills at their own pace. With countless tutorials available across platforms like YouTube or Coursera, learners have no shortage of options when seeking help or guidance. This supportive environment fosters collaboration among learners at all stages.

The Rich Ecosystem of Python Libraries and Frameworks

Exploring Popular Python Libraries: Pandas, NumPy, Matplotlib, and More

Python offers a rich ecosystem of libraries that simplify complex tasks. Pandas is ideal for data manipulation, while NumPy supports numerical computing with arrays and matrices. For data visualization, Matplotlib enables the creation of a wide range of graphs and charts. SciPy provides essential tools for scientific computing. These libraries save developers time by offering pre-built solutions, and their regular updates ensure access to the latest features, enhancing Python’s capabilities for diverse applications. With such a robust set of tools, Python’s remains a top choice for developers working across various industries.

Python Frameworks: Django and Flask for Web Development

As mentioned earlier, Django provides a robust framework for building web applications quickly with built-in features, while Flask offers simplicity and flexibility for smaller projects. Both frameworks have strong communities that contribute plugins and extensions to enhance functionality. Developers can choose between these frameworks based on their project requirements—whether they need a full-fledged solution or prefer a lightweight option tailored to specific needs. This adaptability makes Python an attractive choice for various web development scenarios where speed and efficiency are crucial.

Advanced Python: Beyond the Basics

Object-Oriented Programming in Python: Creating Scalable and Reusable Code

Python supports object-oriented programming (OOP) principles such as encapsulation, inheritance, and polymorphism. This approach enables developers to create scalable applications by organizing code into reusable classes and objects. OOP helps manage complexity by breaking down programs into smaller components that are easier to understand and maintain over time. By utilizing these principles effectively, developers can build software systems that are both efficient and adaptable to changing requirements while promoting code reuse across projects.

Python for Data Analysis and Visualization: Unlocking Insights from Data

Data analysis in Python is made easy with libraries like Pandas for data manipulation combined with Matplotlib or Seaborn for visualization. This combination allows analysts to extract meaningful insights from complex datasets efficiently while presenting findings clearly through visual storytelling techniques. With powerful visualization tools at their disposal, professionals can present their findings clearly and compellingly—making it easier for stakeholders to understand key trends or patterns in the data while facilitating informed decision-making processes across various industries.

The Future of Python: Why Learning Python is an Investment in Your Career

Python’s Role in Emerging Technologies: AI, IoT, and Cloud Computing

As technology evolves, so does the role of Python. It plays a significant part in emerging fields such as AI, where its libraries facilitate machine learning model development alongside automation tasks across industries like finance or healthcare. Additionally, with the rise of the Internet of Things (IoT) and cloud computing platforms like AWS or Azure supporting Python’s ‘applications, the language continues to be relevant in diverse contexts today. As businesses increasingly adopt these technologies into their operations, having proficiency in Python’s will be crucial for staying competitive in the job market—and opens doors to exciting career opportunities.

High Demand for Python Developers in the Job Market

The demand for skilled Python developers remains high across various industries due to its versatility across multiple domains such as web development or scientific computing applications alike; this demand shows no signs of slowing down anytime soon! Companies are increasingly looking for professionals who can leverage Python’s capabilities in web development, data analysis, automation, and AI solutions effectively within teams striving towards innovation goals together! According to recent job market trends indicating positions requiring knowledge of Python’s consistently rank among the most sought-after roles in tech today—indicating a bright future ahead for those who invest time into mastering this powerful language.

Conclusion

In conclusion, learning Python opens up numerous opportunities across diverse fields—from web development to artificial intelligence—it truly offers something valuable regardless if you’re just starting out or already have experience under your belt! Its clean syntax makes it accessible for beginners while offering powerful features that cater specifically towards advanced developers seeking greater challenges ahead! With a rich ecosystem filled with libraries and frameworks supporting various applications available at your fingertips, mastering Python isn’t just an investment in your skills but also serves as a strategic move within today’s rapidly evolving job market landscape!

Are you ready to elevate your programming skills? At TechnoComet Solutions, we recognize the value of mastering versatile languages like Python. Join us today and unlock a world of opportunities that Python can offer for your career and IT services!

Read More: Click Here

0 notes

Text

youtube

Python Numpy Tutorials

#numpy tutorials#numpy for beginners#numpy arrays#what is array in numpy#numpy full array#how to create numpy full array#what is numpy full array#how to use numpy full array#uses of numpy full array#how to define the shape of numpy array#python for beginners#python full course#numpy full course#numpy python playlist#numpy playlist#complete python numpy tutorials#numpy full array function#python array#python numpy library#how to create arrays in python numpy#Youtube

0 notes

Text

Master NumPy Library for Data Analysis in Python in 10 Minutes

Learn and Become a Master of one of the most used Python tools for Data Analysis.

Introduction:-

NumPy is a python library used for working with arrays.It also has functions for working in domain of linear algebra, fourier transform, and matrices.It is an open source project and you can use it freely. NumPy stands for Numerical Python.

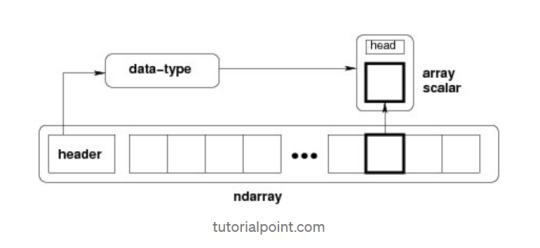

NumPy — Ndarray Object

The most important object defined in NumPy is an N-dimensional array type called ndarray. It describes the collection of items of the same type. Items in the collection can be accessed using a zero-based index.Every item in an ndarray takes the same size of block in the memory.

Each element in ndarray is an object of data-type object (called dtype).Any item extracted from ndarray object (by slicing) is represented by a Python object of one of array scalar types.

The following diagram shows a relationship between ndarray, data type object (dtype) and array scalar type −

It creates an ndarray from any object exposing array interface, or from any method that returns an array.

numpy.array(object, dtype = None, copy = True, order = None, subok = False, ndmin = 0)

The above constructor takes the following parameters −

Object :- Any object exposing the array interface method returns an array, or any (nested) sequence.

Dtype : — Desired data type of array, optional.

Copy :- Optional. By default (true), the object is copied.

Order :- C (row major) or F (column major) or A (any) (default).

Subok :- By default, returned array forced to be a base class array. If true, sub-classes passed through.

ndmin :- Specifies minimum dimensions of resultant array.

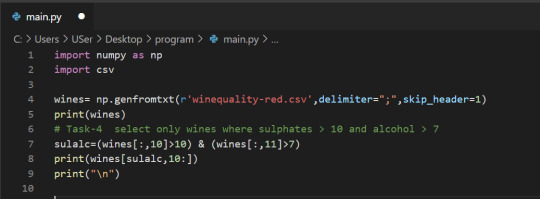

Operations on Numpy Array

In this blog, we’ll walk through using NumPy to analyze data on wine quality. The data contains information on various attributes of wines, such as pH and fixed acidity, along with a quality score between 0 and 10 for each wine. The quality score is the average of at least 3 human taste testers. As we learn how to work with NumPy, we’ll try to figure out more about the perceived quality of wine.

The data was downloaded from the winequality-red.csv, and is available here. file, which we’ll be using throughout this tutorial:

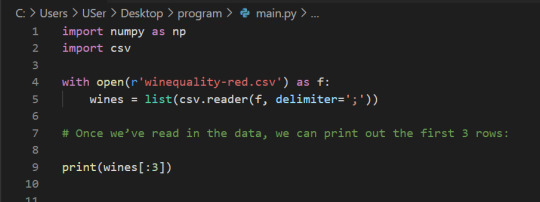

Lists Of Lists for CSV Data

Before using NumPy, we’ll first try to work with the data using Python and the csv package. We can read in the file using the csv.reader object, which will allow us to read in and split up all the content from the ssv file.

In the below code, we:

Import the csv library.

Open the winequality-red.csv file.

With the file open, create a new csv.reader object.

Pass in the keyword argument delimiter=";" to make sure that the records are split up on the semicolon character instead of the default comma character.

Call the list type to get all the rows from the file.

Assign the result to wines.

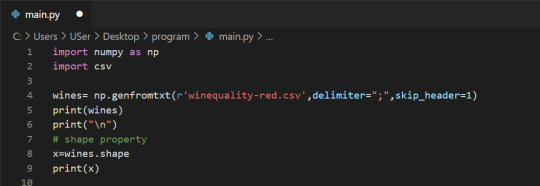

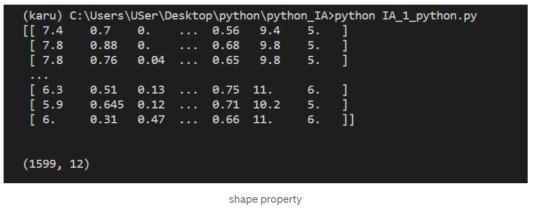

We can check the number of rows and columns in our data using the shape property of NumPy arrays:

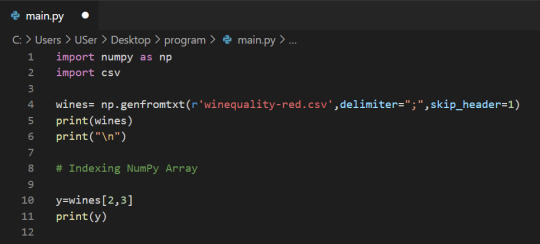

Indexing NumPy Arrays

Let’s select the element at row 3 and column 4. In the below code, we pass in the index 2 as the row index, and the index 3 as the column index. This retrieves the value from the fourth column of the third row:

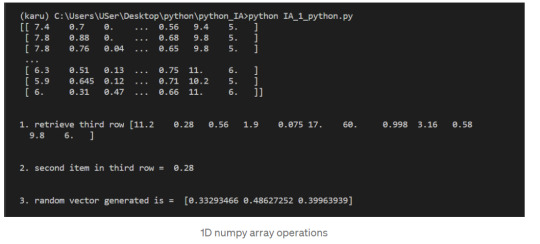

1-Dimensional NumPy Arrays

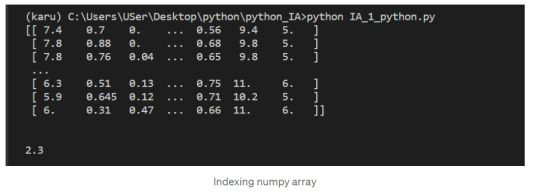

So far, we’ve worked with 2-dimensional arrays, such as wines. However, NumPy is a package for working with multidimensional arrays. One of the most common types of multidimensional arrays is the 1-dimensional array, or vector.

1.Just like a list of lists is analogous to a 2-dimensional array, a single list is analogous to a 1-dimensional array. If we slice wines and only retrieve the third row, we get a 1-dimensional array:

2. We can retrieve individual elements from third_wine using a single index. The below code will display the second item in third_wine:

3. Most NumPy functions that we’ve worked with, such as numpy.random.rand, can be used with multidimensional arrays. Here’s how we’d use numpy.random.rand to generate a random vector:

After successfully reading our dataset and learning about List, Indexing, & 1D array in NumPy we can start performing the operation on it.

The first element of each row is the fixed acidity, the second is the volatile ,acidity, and so on. We can find the average quality of the wines. The below code will:

Extract the last element from each row after the header row.

Convert each extracted element to a float.

Assign all the extracted elements to the list qualities.

Divide the sum of all the elements in qualities by the total number of elements in qualities to the get the mean.

NumPy Array Methods

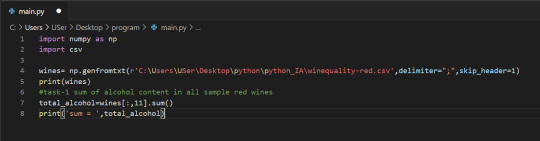

In addition to the common mathematical operations, NumPy also has several methods that you can use for more complex calculations on arrays. An example of this is the numpy.ndarray.sum method. This finds the sum of all the elements in an array by default:

2. Sum of alcohol content in all sample red wines

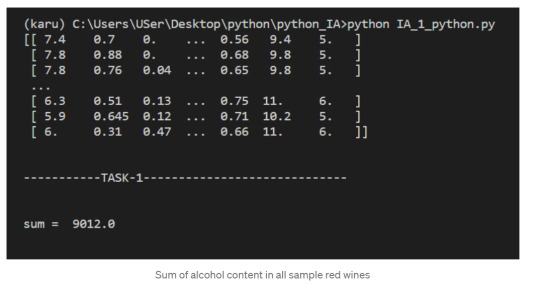

NumPy Array Comparisons

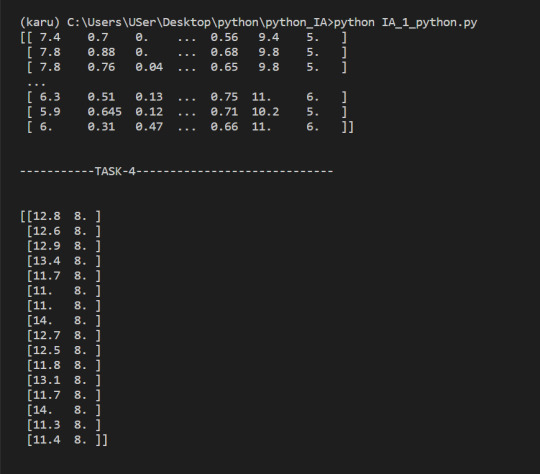

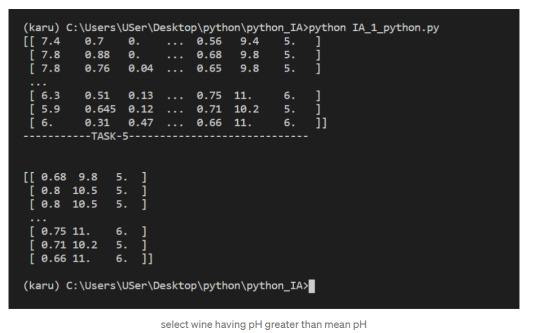

We get a Boolean array that tells us which of the wines have a quality rating greater than 5. We can do something similar with the other operators. For instance, we can see if any wines have a quality rating equal to 10:

3. select wines having pH content > 5

Subsetting

We select only the rows where high_Quality contains a True value, and all of the columns. This subsetting makes it simple to filter arrays for certain criteria. For example, we can look for wines with a lot of alcohol and high quality. In order to specify multiple conditions, we have to place each condition in parentheses, and separate conditions with an ampersand (&):

4. Select only wines where sulphates >10 and alcohol >7

5. select wine having pH greater than mean pH

We have seen what NumPy is, and some of its most basic uses. In the following posts we will see more complex functionalities and dig deeper into the workings of this fantastic library!

To check it out follow me on tumblr, and stay tuned!

That is all, I hope you liked the post. Feel Free to follow me on tumblr

Also, you can take a look at my other posts on Data Science and Machine Learning here. Have a good read!

1 note

·

View note

Text

Running a Random Forest

Hey guy’s welcome back in previous blog we have seen that, how to run classification trees in python you can check it here. In this blog you are going to learn how to run Random Forest using python.

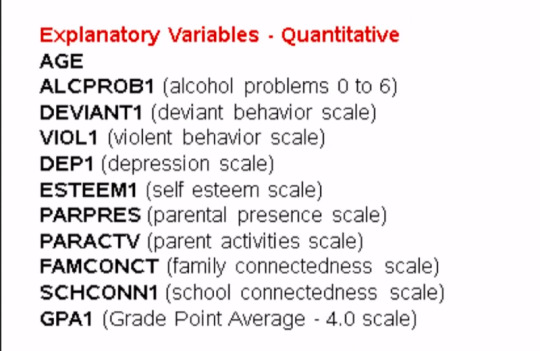

So now let's see how to generate a random forest with Python. Again, I'm going to use the Wave One, Add Health Survey that I have data managed for the purpose of growing decision trees. You'll recall that there are several variables. Again, we'll define the response or target variable, regular smoking, based on answers to the question, have you ever smoked cigarettes regularly? That is, at least one cigarette every day for 30 days.

The candidate explanatory variables include gender, race, alcohol, marijuana, cocaine, or inhalant use. Availability of cigarettes in the home, whether or not either parent was on public assistance, any experience with being expelled from school, age, alcohol problems, deviance, violence, depression, self esteem, parental presence, activities with parents family and school connectedness and grade point average.

Much of the code that we'll write for our random forest will be quite similar to the code we had written for individual decision trees.

First there are a number of libraries that we need to call in, including features from the sklearn library.

from pandas import Series, DataFrame import pandas as pd import numpy as np import os import matplotlib.pylab as plt from sklearn.cross_validation import train_test_split from sklearn.tree import DecisionTreeClassifier from sklearn.metrics import classification_report import sklearn.metrics # Feature Importance from sklearn import datasets from sklearn.ensemble import ExtraTreesClassifier

Next I'm going to use the change working directory function from the OS library to indicate where my data set is located.

os.chdir("C:\TREES")

Next I'll load my data set called tree_addhealth.csv. because decision tree analyses cannot handle any NAs in our data set, my next step is to create a clean data frame that drops all NAs. Setting the new data frame called data_clean I can now take a look at various characteristics of my data, by using the D types and describe functions to examine data types and summary statistics.

#Load the dataset

AH_data = pd.read_csv("tree_addhealth.csv") data_clean = AH_data.dropna()

data_clean.dtypes data_clean.describe()

Next I set my explanatory and response, or target variables, and then include the train test split function for predictors and target. And set the size ratio to 60% for the training sample, and 40% for the test sample by indicating test_size=.4.

#Split into training and testing sets

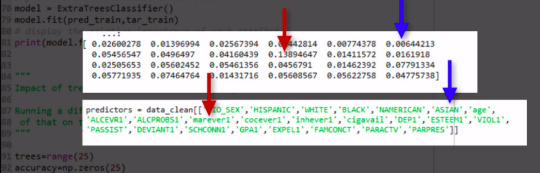

predictors = data_clean[['BIO_SEX','HISPANIC','WHITE','BLACK','NAMERICAN','ASIAN','age', 'ALCEVR1','ALCPROBS1','marever1','cocever1','inhever1','cigavail','DEP1','ESTEEM1','VIOL1', 'PASSIST','DEVIANT1','SCHCONN1','GPA1','EXPEL1','FAMCONCT','PARACTV','PARPRES']]

targets = data_clean.TREG1

Here I request the shape of these predictor and target and training test samples.

pred_train.shape pred_test.shape tar_train.shape tar_test.shape

From sklearn.ensamble I import the RandomForestClassifier

#Build model on training data from sklearn.ensemble import RandomForestClassifier

Now that training and test data sets have already been created, we'll initialize the random forest classifier from SK Learn and indicate n_estimators=25. n_estimators are the number of trees you would build with the random forest algorithm.

classifier=RandomForestClassifier(n_estimators=25)

Next I actually fit the model with the classifier.fit function which we passed the training predictors and training targets too.

classifier=classifier.fit(pred_train,tar_train)

Then, we go unto the prediction on the testator set. And we could also similar to decision tree code as for the confusion matrix and accuracy scores.

predictions=classifier.predict(pred_test)

sklearn.metrics.confusion_matrix(tar_test,predictions) sklearn.metrics.accuracy_score(tar_test, predictions)

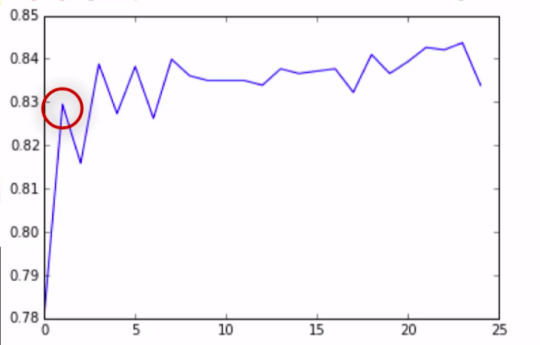

For the confusion matrix, we see the true negatives and true positives on the diagonal. And the 207 and the 82 represent the false negatives and false positives, respectively. Notice that the overall accuracy for the forest is 0.84. So 84% of the individuals were classified correctly, as regular smokers, or not regular smokers.

Given that we don't interpret individual trees in a random forest, the most helpful information to be gotten from a forest is arguably the measured importance for each explanatory variable. Also called the features. Based on how many votes or splits each has produced in the 25 tree ensemble. To generate importance scores, we initialize the extra tree classifier, and then fit a model. Finally, we ask Python to print the feature importance scores calculated from the forest of trees that we've grown.

# fit an Extra Trees model to the data model = ExtraTreesClassifier() model.fit(pred_train,tar_train) # display the relative importance of each attribute print(model.feature_importances_)

The variables are listed in the order they've been named earlier in the code. Starting with gender, called BIO_SEX, and ending with parental presence. As we can see the variables with the highest important score at 0.13 is marijuana use. And the variable with the lowest important score is Asian ethnicity at .006.

As you will recall, the correct classification rate for the random forest was 84%. So were 25 trees actually needed to get this correct rate of classification? To determine what growing larger number of trees has brought us in terms of correct classification. We're going to use code that builds for us different numbers of trees, from one to 25, and provides the correct classification rate for each. This code will build for us random forest classifier from one to 25, and then finding the accuracy score for each of those trees from one to 25, and storing it in an array. This will give me 25 different accuracy values. And we'll plot them as the number of trees increase.

""" Running a different number of trees and see the effect of that on the accuracy of the prediction """

trees=range(25) accuracy=np.zeros(25)

for idx in range(len(trees)): classifier=RandomForestClassifier(n_estimators=idx + 1) classifier=classifier.fit(pred_train,tar_train) predictions=classifier.predict(pred_test) accuracy[idx]=sklearn.metrics.accuracy_score(tar_test, predictions)

plt.cla() plt.plot(trees, accuracy)

As you can see, with only one tree the accuracy is about 83%, and it climbs to only about 84% with successive trees that are grown giving us some confidence that it may be perfectly appropriate to interpret a single decision tree for this data. Given that it's accuracy is quite near that of successive trees in the forest.

To summarize, like decision trees, random forests are a type of data mining algorithm that can select from among a large number of variables. Those that are most important in determining the target or response variable to be explained.

Also light decision trees. The target variable in a random forest can be categorical or quantitative. And the group of explanatory variables or features can be categorical and quantitative in any combination. Unlike decision trees however, the results of random forests often generalize well to new data.

Since the strongest signals are able to emerge through the growing of many trees. Further, small changes in the data do not impact the results of a random forest. In my opinion, the main weakness of random forests is simply that the results are less satisfying, since no trees are actually interpreted. Instead, the forest of trees is used to rank the importance of variables in predicting the target.

Thus we get a sense of the most important predictive variables but not necessarily their relationships to one another.

Complete Code

from pandas import Series, DataFrame import pandas as pd import numpy as np import os import matplotlib.pylab as plt from sklearn.cross_validation import train_test_split from sklearn.tree import DecisionTreeClassifier from sklearn.metrics import classification_report import sklearn.metrics # Feature Importance from sklearn import datasets from sklearn.ensemble import ExtraTreesClassifier

os.chdir("C:\TREES")

#Load the dataset

AH_data = pd.read_csv("tree_addhealth.csv") data_clean = AH_data.dropna()

data_clean.dtypes data_clean.describe()

#Split into training and testing sets

predictors = data_clean[['BIO_SEX','HISPANIC','WHITE','BLACK','NAMERICAN','ASIAN','age', 'ALCEVR1','ALCPROBS1','marever1','cocever1','inhever1','cigavail','DEP1','ESTEEM1','VIOL1', 'PASSIST','DEVIANT1','SCHCONN1','GPA1','EXPEL1','FAMCONCT','PARACTV','PARPRES']]

targets = data_clean.TREG1

pred_train, pred_test, tar_train, tar_test = train_test_split(predictors, targets, test_size=.4)

pred_train.shape pred_test.shape tar_train.shape tar_test.shape

#Build model on training data from sklearn.ensemble import RandomForestClassifier

classifier=RandomForestClassifier(n_estimators=25) classifier=classifier.fit(pred_train,tar_train)

predictions=classifier.predict(pred_test)

sklearn.metrics.confusion_matrix(tar_test,predictions) sklearn.metrics.accuracy_score(tar_test, predictions)

# fit an Extra Trees model to the data model = ExtraTreesClassifier() model.fit(pred_train,tar_train) # display the relative importance of each attribute print(model.feature_importances_)

""" Running a different number of trees and see the effect of that on the accuracy of the prediction """

trees=range(25) accuracy=np.zeros(25)

for idx in range(len(trees)): classifier=RandomForestClassifier(n_estimators=idx + 1) classifier=classifier.fit(pred_train,tar_train) predictions=classifier.predict(pred_test) accuracy[idx]=sklearn.metrics.accuracy_score(tar_test, predictions)

plt.cla() plt.plot(trees, accuracy)

If you are still here, I appreciate that, and see you guy’s next time. ✌️

1 note

·

View note

Text

NUMPY ARRAY

Python includes a large number of libraries that may be used to execute a variety of tasks. The libraries are organized into groups based on the task at hand. Python is a fantastic programming language that provides the ideal environment for doing various scientific and mathematical calculations. Numpy, a popular Python library, is an example of such a library. It's a Python-based open-source toolkit for conducting computations in the engineering and scientific domains.

Numpy is a Python package that contains multidimensional arrays and matrix data structures. The ndarray object is a homogenous array object provided by the library. In Python, the Numpy array has the shape of an n-dimensional array. There are also other methods in the library that can be used to execute operations on the array. The library may be used to execute a variety of mathematical operations on the array as well. Python can be enhanced with data structures that will allow for the efficient calculation of various matrices and arrays. The library also includes a number of mathematical functions that can be used to manipulate matrices and arrays.

The library's installation and import

A Python distribution of scientific origin should be used to install Numpy in Python. The library may be installed with the following command if the machine already has Python installed.

Numpy can be installed with Conda or by using the pip command.

Anaconda, which is one of the easiest ways to install Python, can be used if it hasn't been installed yet on the machine. Other libraries or packages, such as SciPy, Numpy, Scikit-learn, pandas, and others, do not need to be installed individually when installing Anaconda.

The command import Numpy as np can be used to import the Numpy library into Python.

The module includes numerous methods for quickly and efficiently creating arrays in Python. It also allows you to change the data within the arrays or modify the arrays themselves. The distinction between a list and a Numpy array in Python is that the data in a Python list can be of different data types, whereas the items in a Numpy array in Python should be homogeneous. Within the Numpy array, the items have the same data types. The mathematical functions that could be applied over the Numpy array would become inefficient if the elements in the Numpy array were of different data types.

Python Numpy Array

Within the Numpy library, the Numpy array is a centralized data structure. When an array is defined, it is made up of arrays that are arranged in a grid and hold raw data information. It also offers instructions on how to locate an element in an array and how to interpret an element in an array. The Numpy array is made up of grid elements that can be indexed in a variety of ways. The array's elements all have the same data type, hence they're referred to as array dtype.

· The array's index is determined by a tuple of non-negative integers. Integers, Booleans, and other arrays can also be used to index it.

· The dimension number of an array is used to determine its rank.

· An array's form is defined as the set of numbers that specify the array's size in each dimension.

· For high-dimensional data, a Python list with nested lists can be used to initialize the arrays.

· Square brackets can be used to access the items of the array. The indexing of the Numpy array always starts with 0, therefore when accessing the elements, the array's first element will be at the 0 position.

The Numpy array's basic operations

· In Python, the function np.array() is used to create a Numpy array. The user must first generate an array before passing it to a list. In the list, the user can also specify the data type.

· In Python, the function np.sort() can be used to sort a Numpy array. When the function is invoked, the user can define the kind, axis, and order.

· Users can use ndarray.ndim to retrieve information about the array's dimensions or axis number. Using ndarray.size also informs the user of the total number of elements in the array.

· Arrays in Numpy can be indexed and sliced in the same manner that lists in Python can.

· The sign "+" can be used to join two arrays together. Additionally, the sum() function can be used to return the sum of all the entries in an array. The function can be applied to arrays with one, two, or even three dimensions.

· Operations can be carried out over arrays of various forms using the idea of broadcasting in a Numpy array. The array dimensions, however, must be compatible; otherwise, the application will throw a ValueError.

0 notes

Text

My Programming Journey: Machine Learning using Python

After learning how to code in SAIT I decided I wanted to take things to the next level with my programming skills and try Machine Learning by myself, given that Artificial Intelligence has always been a really intriguing topic for me and I like a good old challenge to improve, grow and, most importantly, learn new things.

A lot is said about Machine Learning, but we can define it as algorithms that gather/analyze data and learn automatically through experience without being programmed to do so. The learning is done by identifying patterns and making decisions or predictions based on the data.

ML is not a new technology but recently has been growing rapidly and being used in everyday life. Examples that we are all familiar with are:

-Predictions in Search Engines

-Content recommendations from stream services or social media platforms

-Spam detection in emails

-Self-driving cars

-Virtual assistants

The Importance of Machine Learning

The vast amount of data available and processing power in modern technology makes it the perfect environment to train machine-learning models and by building precise models an organization can make better decisions. Most industries that deal with big data recognize the value of ML technology. In the health care industry, this technology has made it easier to diagnose patients, provide treatment plans and even perform precision surgeries. Government agencies and financial services also benefit from machine-learning since they deal with large amounts of data. This data is often used for insights and security, like preventing identity theft and fraud.

Other things like speech and language recognition, facial recognition for surveillance, transcription and translation of speech and computer vision are all thanks to machine learning, and the list goes on.

Types of Machine Learning

Usually, machine learning is divided into three categories: supervised learning, unsupervised learning and reinforcement learning.

Supervised learning

For this approach, machines are exposed to labelled data and learn by example. The algorithm receives a set of inputs with the correct outputs and learns by comparing its output with the correct output and modify the model as needed. The most common supervised learning algorithms are classification, regression and artificial neural networks.

Unsupervised learning

Unlike the previous approach, the unsupervised learning method takes unlabelled data so that the algorithm can identify patterns in data. Popular algorithms include nearest-neighbor mapping and k-means clustering.

Reinforcement learning

This approach consists of the algorithm interacting with the environment to find the actions that give the biggest rewards as feedback.

Iris Flower Classification Project

The iris flower problem is the “Hello World” of machine learning.

Iris flowers in Vancouver Park by Kevin Castel in Unsplash - https://unsplash.com/photos/y4xISRK8TUg

I felt tempted to try a machine learning project that I knew was too advanced for me and flex a little or fail miserably, but I decided to start with this iconic project instead. The project consists of classifying iris flowers among three species from measurements of sepals and petals’ length and width. The iris dataset contains 3 classes of 50 instances each, with classes referring to a type of iris flower.

Before Starting the Project

For this project, and for the rest of my machine learning journey, I decided to install Anaconda (Python 3.8 distribution), a package management service that comes with the best python libraries for data science and with many editors, including Visual Studio Code, the editor I will be using for this demonstration.

You can get Anaconda here: https://repo.anaconda.com/archive/Anaconda3-2021.05-Windows-x86_64.exe

I find that Anaconda makes it easy to access Python environments and it contains a lot of other programs to code and practice, as well as nice tutorials to get acquainted with the software.

In the following videos, I will be going step by step on the development of this project:

youtube

youtube

If videos aren't your thing, the readable step-by-step instructions are here in the post:

Prior to this project, I already had Visual Studio Code installed on my computer since I had used it for previous semesters. From VSCode I installed Python and then I selected a Python interpreter. The Python interpreter I will use is Anaconda, because it has all the dependencies I need for coding.

Getting Started

I configured Visual Studio Code to be able to develop in Python, then I created a python file named ml-iris.py and imported the libraries, which are the following:

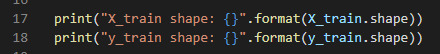

The main libraries I will be using are numpyand sklearn.

· Numpy supports large, multidimensional arrays and a vast collection of mathematical operations to apply on these arrays.

· Sklearn includes various algorithms like classification, regression and clustering, the dataset used is embedded in sklearn.

From the previously mentioned libraries, I am using the following methods:

· load_iris is the data source of the Iris Flowers dataset

· train_test_split is the method to split the dataset

· KNeighborsClassifier is the method to classify the dataset.

I will be applying a supervised learning type of ML to this project: I already know the measurements of the three kinds of iris species; setosa, versicolor or virginica. From these measurements, I know which species each flower belongs to. So, what I want is to build a model that learns from the features of these already known irises and then predicts one of the species of iris when given new data, this means new irises flowers.

Now I start building the model:

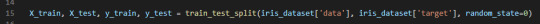

The first step (line 6), I define the dataset by loading the load_iris() dataset from Sklearn.

Next step (line 7), I retrieve the target names information from the dataset, then format it and print it.

I repeat this step for retrieval of feature names, type and shape of data, type and shape of target, as well as target information (lines 8 to 13).

Target names should be Setosa, Versicolor and Virginica.

Feature names should be sepal length, sepal width, petal length and petal width.

Type of data should be a numpy array.

Shape of data is the shape of the array, which is 150 samples with 4 features each.

Type of target is a numpy array.

Shape of target is 150 samples.

The target is the list of all the samples, identified as numbers from 0 to 2.

· Setosa (0)

· Versicolor(1)

· Virginica(2)

Now I need to test the performance of my model, so I will show it new data, already labelled.

For this, I need to split the labelled data into two parts. One part will be used to train the model and the rest of the data will be used to test how the model works.

This is done by using the train_test_split function. The training set is x_train and y_train. The testing set is x_test and y_test. These sets are defined by calling the previously mentioned function (line 15). The arguments for the function are samples (data), features (target) and a random seed. This should return 4 datasets.

The function extracts ¾ of the labelled data as the training set and the remainder ¼ of the labelled data will be used as the test set.

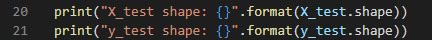

Then I will print the shape of the training sets:

And the shape of the testing sets:

Now that the training and testing sets are defined, I can start building the model.

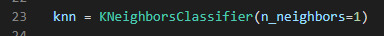

For this, I will use the K nearest neighbors classifier, from the Sklearn library.

This algorithm classifies a data point by its neighbors (line 23), with the data point being allocated to the class most common among its K nearest neighbors. The model can make a prediction by using the majority class among the neighbors. The k is a user-defined constant (I used 1), and a new data point is classified by assigning the label which is most frequent among the k training samples nearest to that data point.

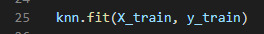

By using the fit method of the knn object (K Nearest Neighbor Classifier), I am building the model on the training set (line 25). This allows me to make predictions on any new data that comes unlabelled.

Here I create new data (line 27), sepal length(5), sepal width(2.9), petal length(1) and petal witdth(0.2) and put it into an array, calculate its shape and print it (line 28), which should be 1,4. The 1 being the number of samples and 4 being the number of features.

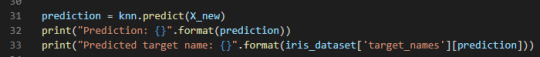

Then I call the predict method of the knn object on the new data:

The model predicts in which class this new data belongs, prints the prediction and the predicted target name (line 32, 32).

Now I have to measure the model to make sure that it works and I can trust its results. Using the testing set, my model can make a prediction for each iris it contains and I can compare it against its label. To do this I need the accuracy.

I use the predict method of the knn object on the testing dataset (line 36) and then I print the predictions of the test set (line 37). By implementing the “mean” method of the Numpy library, I can compare the predictions to the testing set (line 38), getting the score or accuracy of the test set. In line 39 I’m also getting the test set accuracy using the “score” method of the knn object.

Now that I have my code ready, I should execute it and see what it comes up with.

To run this file, I opened the Command Line embedded in Anaconda Navigator.

I could start the command line regularly but by starting it from Anaconda, my Python environment is already activated. Once in the Command Line I type in this command:

C:/path/to/Anaconda3/python.exe "c:/path/to/file/ml-iris.py"

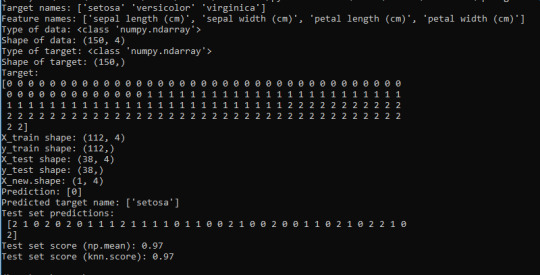

And this is my result:

The new data I added was put into the class 0 (Setosa). And the Test set scores have a 97% accuracy score, meaning that it will be correct 97% of the time when predicting new iris flowers from new data.

My model works and it classified all of these flowers.

References

· https://docs.python.org/3/tutorial/interpreter.html

· https://unsplash.com/photos/y4xISRK8TUg

· https://en.wikipedia.org/wiki/K-nearest_neighbors_algorithm

· https://www.sas.com/en_ca/insights/analytics/machine-learning.html

· https://en.wikipedia.org/wiki/Machine_learning

· https://medium.com/gft-engineering/start-to-learn-machine-learning-with-the-iris-flower-classification-challenge-4859a920e5e3

0 notes

Text

How to Install TensorFlow and Use TensorFlow

In today’s blog, we will discuss the basics of TensorFlow, its introduction, and its uses.

Introduction

Google developed the most well known deep learning framework TensorFlow. However, Google utilizes artificial intelligence (AI) in the entirety of its items to improve internet searches, interpretation, and picture recognition.

To give a robust model, Google clients can encounter a quicker and increasingly refined inquiry with AI. When the client types a catchphrase in the search bar, Google suggests what could be the following word.

Google needs to utilize AI to exploit its complex datasets to give clients the best understanding. Three unique gatherings use AI:

Researchers

Data scientists

Software engineers.

We would all be able to utilize the equivalent toolset with each other and improve their effectiveness.

Google doesn’t simply have any information; they also have the world’s most powerful server PCs. So that TensorFlow was worked to scale. TensorFlow is the ML framework that is created and developed by the Google Brain Team to accelerate machine learning and in-depth neural network research.

TensorFlow was designed to run on different CPUs or GPUs and even portable working frameworks. It has a few wrappers in a few languages, such as Python, C++, or Java.

In This Instructional Exercise, You Will Learn

What is TensorFlow?

History of TensorFlow

TensorFlow architecture

Where can Tensorflow run?

Prologue to components of TensorFlow

What is the reason, well known TensorFlow?

Rundown of prominent algorithms upheld by TensorFlow

Straightforward TensorFlow example

Alternatives to load data into TensorFlow

Make Tensorflow pipeline

History of TensorFlow

Two or three years back, profound learning began to outflank all other AI calculations when giving a large amount of data. Google realized that it could utilize these profound neural network to improve its administrations:

Gmail

Photograph

Google web index

They constructed a structure called Tensorflow to let scientists and engineers cooperate on an AI model. When created and scaled, it enables the bunches of individuals to utilize it.

It was first made open in late 2015, while the primary stable rendition showed up in 2017. It is open source under Apache Open Source permit. Also, you can utilize it, alter it, and redistribute the adjusted rendition for a charge without paying anything to Google.

TensorFlow architecture

Tensorflow design works in three sections:

Preprocessing the information

Fabricate the model

Train and gauge the model

It is called TensorFlow because it accepts contribution as a multi-dimensional array. This multi-dimensional array called tensors. You can develop a kind of flowchart of activities (called a graph) that you need to perform on that information. The information goes in toward one side, and afterward, it moves through this arrangement of different tasks and turns out the opposite end as yield.

Where Can Tensorflow Run?

TensorFlow equipment and programming necessities are grouped into:

Advancement phase: This is the point at which you train the model. And, later on, on your desktop or PC, the testing is typically done.

Run phase or inference phase: Once preparing is done, TensorFlow can be run on a wide range of stages. You can run it on work area running Windows, macOS, or Linux Cloud as a web administrator or on cell phones like iOS and Android.

You can prepare it on various machines; then, you can run it on an alternate machine when you have the prepared model.

The model can be prepared and run on GPUs and CPUs. GPUs were at first intended for computer games. In late 2010, Stanford specialists found that GPU was likewise generally excellent at grid tasks and variable based math, so it makes them quick for doing these sorts of computations. Profound learning depends on a great deal of grid augmentation. TensorFlow is quick at figuring the lattice duplication since it is written in C++. In spite of the fact that it is actualized in C++, TensorFlow can be used to and constrained by different languages basically, Python.

At last, a unique element of TensorFlow is the TensorBoard. The TensorBoard empowers to show graphically and outwardly what TensorFlow is doing.

Prologue to Components of TensorFlowTensor

Based on its center structure, “Tensor,” the name Tensorflow is derived. In TensorFlow, every one of the calculations includes tensors. A tensor is a vector or framework of n-measurements that speaks to a wide range of information. All qualities in a tensor hold an indistinguishable information type with a known (or halfway known) shape. The state of the information is the dimensionality of the network or cluster.

Based on the information or the consequence of a calculation a tensor is started. In TensorFlow, every one of the tasks is led inside a chart. The diagram is a lot of calculation that happens progressively.

The diagram plots the operations and associations between the hubs, and it may not show the qualities. The edge of the hubs is the tensor, i.e., an approach to populate the activity with information.

Diagrams

TensorFlow utilizes a diagram structure. The chart accumulates and depicts all the arrangement calculations done during the preparation. The diagram has bunches of focal points:

It was done to run on different CPUs or GPUs and even portable working framework.

The movability of the diagram permits us to save the calculations for quick or later use. The chart usually spared and executed later on.

Every one of the calculations in the diagram is finished by associating tensors together.

A tensor has a hub and an edge. The hub conveys scientific activity and produces endpoints yields. The edges clarify the info/yield connections between hubs.

For What Reason Is TensorFlow Well Known?

TensorFlow is the best framework for all; it is worked to be open for everybody. TensorFlow library consolidates various APIs to work at scale with profound learning engineerings like CNN or RNN. Moreover, it enables the engineer to picture the development of the neural system with Tensorboard. Also, this device is useful to troubleshoot the program. At last, TensorFlow is worked to be sent at scale. It runs on CPU and GPU.

Furthermore, TensorFlow pulls in the biggest notoriety on GitHub contrast with the other profound learning structure.

Rundown of Prominent Algorithms bolstered by TensorFlow

At this time, TensorFlow 1.10 has a worked in API for:

Direct relapse: tf.estimator.LinearRegressor

Classification:tf.estimator.LinearClassifier

Profound learning grouping: tf.estimator.DNNClassifier

Profound learning wipe and profound: tf.estimator.DNNLinearCombinedClassifier

Sponsor tree relapse: tf.estimator.BoostedTreesRegressor

Supported tree characterization: tf.estimator.BoostedTreesClassifier

Basic TensorFlow Example

import numpy as np

import TensorFlow as tf

In the initial two lines of code, we have imported TensorFlow as tf. With Python, it is a typical practice to utilize a short name for a library. The bit of leeway is to maintain a strategic distance from the complete name of the library when we have to utilize it. For example, we can import TensorFlow as tf, and call tf when we need to utilize a Tensorflow capacity.

Let ‘s practice the basic work process of TensorFlow with a basic model. Subsequently, we will make a computational diagram that increases two numbers together.

During the model, we will increase X_1 and X_2 together. TensorFlow will make a hub to associate the activity. It is called a duplicate in our case. At the point where the chart resolves, TensorFlow computational motors increase X_1 and X_2 together.

At last, we will run a TensorFlow session that will run the computational chart with the estimations of X_1 and X_2 and print the aftereffect of the augmentation.

Let ‘s characterize the X_1 and X_2 input hubs. At the point when we make a hub in TensorFlow, we need to pick what sort of hub to make. The X1 and X2 hubs will be a placeholder hub. The placeholder relegates another worth each time we make a count. We will make them as a TF speck placeholder hub.

Stage 1: Define the Variable

X_1 = tf.placeholder(tf.float32, name = “X_1”)

X_2 = tf.placeholder(tf.float32, name = “X_2”)

At the point when we make a placeholder hub, we need to go in the information type will include numbers here so we can utilize a coasting point information type, how about we use tf.float32. We likewise need to give this hub a name. This name will show up when we take a gander at the graphical perceptions of our model. How about we name this hub X_1 by going in a parameter called name with an estimation of X_1 and now how about we characterize X_2 a similar way. X_2.

Stage 2: Define the Calculation

increase = tf.multiply(X_1, X_2, name = “duplicate”)

Presently we can characterize the hub that does the augmentation activity. In TensorFlow, we can do that by making a tf.multiply hub.

We will go in the X_1 and X_2 hubs to the augmentation hub. It advises TensorFlow to connect those hubs in the computational diagram, so we are requesting that they pull the qualities from x and y and increase the outcome. How about we likewise give the augmentation hub the name increase. It is the whole definition of our straightforward computational diagram.

Stage 3: Execute the Activity

Finally, to execute activities in the chart, we need to make a session. In TensorFlow, tf.Session() executes the command to end the program. Since we have a session, we can request that the session show tasks on our computational chart to calling sessions. Hence, to run the calculation, we have to utilize run.

At the point when the expansion activity runs, it will see that it needs to get the estimations of the X_1 and X_2 hubs, so we additionally need to sustain in esteems for X_1 and X_2. We can do that by providing a parameter called feed_dict, pass 1, 2, 3 for X_1 and 4, 5, 6 for X_2.

Finally, we should see 4, 10, and 18 for 1×4, 2×5 and 3×6

X_1 = tf.placeholder(tf.float32, name = “X_1”)

X_2 = tf.placeholder(tf.float32, name = “X_2”)

duplicate = tf.multiply(X_1, X_2, name = “increase”)

with tf.Session() as session:

result = session.run(multiply, feed_dict={X_1:[1,2,3], X_2:[4,5,6]})

Please feel free to leave your valubale feedbak and comments in the section below.

To know more about our services, please visit Loginworks Softwares Inc.

0 notes

Text

350+ TOP PYTHON Interview Questions and Answers

PYTHON Interview Questions for freshers & experienced :-

1) What Is Python? Python is an interpreted, interactive, object-oriented programming language. It incorporates modules, exceptions, dynamic typing, very high level dynamic data types, and classes. Python combines remarkable power with very clear syntax. It has interfaces to many system calls and libraries, as well as to various window systems, and is extensible in C or C++. It is also usable as an extension language for applications that need a programmable interface. Finally, Python is portable: it runs on many Unix variants, on the Mac, and on PCs under MS-DOS, Windows, Windows NT, and OS/2. 2) What are the different ways to create an empty NumPy array in python? There are two methods we can apply to create empty NumPy arrays. The first method. import numpy numpy.array() The second method. # Make an empty NumPy array numpy.empty(shape=(0,0)) 3) Can’t concat bytes to str? This is providing to be a rough transition to python on here f = open( ‘myfile’, ‘a+’ ) f.write(‘test string’ + ‘\n’) key = “pass:hello” plaintext = subprocess.check_output() print (plaintext) f.write (plaintext + ‘\n’) f.close() The output file looks like: test string 4) Expline different way to trigger/ raise exception in your python script? Raise used to manually raise an exception general-form: raise exception-name (“message to be conveyed”). voting_age = 15 if voting_age output: ValueError: voting age should be at least 19 and above 2.assert statements are used to tell your program to test that condition attached to assert keyword, and trigger an exception whenever the condition becomes false. Eg: a = -10 assert a > 0 #to raise an exception whenever a is a negative number Output: AssertionError Another way of raising an exception can be done by making a programming mistake, but that is not usually a good way of triggering an exception 5) Why is not__getattr__invoked when attr==’__str__’? The base class object already implements a default __str__ method, and __getattr__function is called for missing attributes. The example as it we must use the __getattribute__ method instead, but beware of the dangers. class GetAttr(object): def __getattribute__(self, attr): print(‘getattr: ‘ + attr) if attr == ‘__str__’: return lambda: ‘’ else: return lambda *args: None A better and more readable solution to simply override the __str__ method explicitly. class GetAttr(object): def __getattr__(self, attr): print(‘getattr: ‘ + attr) return lambda *args: None def __str__(self): return ‘’ 6)What do you mean by list comprehension? The process of creating a list performing some operation on the data so that can be accessed using an iterator is referred to as list comprehension. EX: Output: 65,66,67,68,69,70,71,72,73,74,75,76,77,78,79,80,81,82,83,84,85,86,87,88,89,90 7) What will be the output of the code:def foo (i=)? i.append (1) return i >>> foo () >>> foo () Output: The argument to the function foo is evaluated once when the function is defined However since it is a list on every all the list is modified by appending a 1 to it. 8) How to Tic tac toe computer move? Below The code of computer move in the game tic tac toe in python def computermove(board,computer,human): movecom=” rmoves=rd(0,8) for movecom in legalmoves(board): board=computer if winner(board)==computer: return movecom board=” for movecom in legalmoves(board): board=human if winner(board)==human: return movecom board=” while rmoves not in legalmoves(board): rtmoves=rd(0,8) return rmoves 9) Explain about ODBC and python? ODBC (Open Database Connectivity) API standard allows the connections with any database that supports the interface such as the PostgreSL database or Microsoft access in a transparent manner Three types of ODBC modules for python: PythonWin ODBC module – limited development mxODBC – a commercial product pyodbc – This is open source python package 10) How to implement the decorator function, using dollar ()? Code: def dollar(fn): def new(*args): return ‘$’ + str(fn(*args)) return new @dollar def price(amount, tax_rate): return amount + amount*tax_rate print price(100,0.1) output: $110